30 Jan 2025

Imagine for a moment that it's the year 2124, and the United States of America is tumbling through the last stages of a long decline. The drug cartels along the southern border have long since turned into private armies commanded by powerful warlords. When civil war breaks out in the US, one of those warlords brings several lesser bosses under his command and leads the combined force through the crumbling border defenses. He seizes most of California and proclaims it his personal kingdom. The remnants of the federal government moved to Cincinnati after rising sea levels turned Washington into salt marsh. They are too weak, and too preoccupied with other crises, to resist.

And here they are.

A few decades later the same warlord sets out to expand his kingdom. He pushes east through Arizona and New Mexico to invade Texas, where a few surviving wells still produce the oil that the rump United States desperately needs. In response, a tough and ruthless American general, the last capable commander the US has left, raises an army made up mostly of West African mercenaries and launches a massive counteroffensive.

Carnage follows. In a series of brutal battles the warlord's forces are routed, his short-lived kingdom collapses, and he himself dies in a hail of bullets. It is just one episode among many in the bloody twilight of a dying nation, and it only briefly postpones the final collapse of the US. It does, however, catch the attention of the minstrels. Over the centuries that follow, the story of the warlord who conquered California becomes the core of a whole cycle of legends. More than a thousand years later, those legends inspire one of the world's greatest works of art.

A modern image of Gundahar. Yes, he was tough.

Change a few details and move the action back several centuries, to the fall of a different empire. That is how the story behind "The Ring of the Nibelung" came to be.

The warlord's name was something like Gundahar. He was king of the Burgundians, a Germanic tribe that managed to give its name to part of modern France before it was nearly wiped out. In 406 the Burgundians crossed the Rhine into Roman territory and founded a kingdom there. Gundahar himself first appears in the Roman chronicles in 411. In that year he and another barbarian king backed one side in the bloody civil war that racked the late Roman Empire. In 413, after his side lost, Gundahar and his people struck a deal with the weakened victors. The deal confirmed their hold over a large swath of Roman territory west of the Rhine.

In 435 Gundahar apparently decided that this wasn't enough. He invaded Roman territory again, pushing toward the strategic districts of northern Gaul.

This time he faced a more dangerous opponent: Flavius Aëtius, the last capable general of the Western Roman Empire. Aëtius was born into a Roman aristocratic family but spent his youth as a hostage in "barbarian" lands. He lived first at the court of the Visigothic king Alaric I, then at the court of Uldin, king of the Huns, and his successor Charaton. The experience hardened him into a resourceful soldier and strategist, with close ties among the barbarian tribes and a deep understanding of their ways. Throughout his career he worked the tangled alliances of the age to recruit and lead armies made up of the barbarians themselves.

An even more modern image of Flavius Aëtius. He was even tougher. (Yes, these days he's a character in the computer game Rise of Kingdoms.)

That is exactly what he did when Gundahar invaded Gaul. Aëtius quickly raised an army of Huns and marched against him. In two brutal campaigns in 436 and 437 he crushed the Burgundians, killing Gundahar and 20,000 of his warriors. The survivors surrendered to Aëtius and were resettled in the region south of Lake Geneva, where they soon blended with the local peoples.

Aëtius had many more battles ahead of him before the emperor he served ordered his murder; loyalty to the Roman Empire was a dangerous choice in those days. His victory over Gundahar was just one episode in the bloody twilight of the empire, and it put off the fall of the West by a few decades. The destruction of the Burgundian kingdom, however, caught the attention of the storytellers, and so a cycle of legends was born.

Gunther, as this chieftain eventually came to be called, brought more to the story than a bloody ending. According to the chronicles he was a Gibichung, that is, the son or grandson of an earlier Burgundian king named Gibich. He was also called a Nibelung.

Nobody knows today exactly what that word meant in Gunther's time, but it is probably related to the modern German Nebel, "mist." If so, "Nibelung" means "child of the mist." Is that an echo of some forgotten archaic mythology? Quite possibly, but we are unlikely ever to know for sure. Keep these titles in mind; we'll meet them again.

Oral tradition is a strange thing. It can preserve fragments of knowledge from the depths of time. Aboriginal peoples on Australia's northern coast and the inhabitants of Scotland's western islands, for example, have kept accurate knowledge of coastlines that existed 10,000 years ago, when sea level was 90 meters lower. Yet oral tradition mixes up the facts just as casually when that makes for a better story.

Merlin, for instance, was almost certainly a real person. He lived two generations after the historical King Arthur and had nothing to do with Arthur's short-lived kingdom; he served Gwenddoleu, the last pagan king of the Scottish Lowlands. Once Artorius, a Romano-British general, turned into the radiant figure of King Arthur, however, his legend became a magnet for other British traditions. Merlin is just one of many once-independent figures drawn into the orbit of the Arthurian cycle, which over time absorbed a great many originally separate stories.

Nobody has the least idea what Attila the Hun looked like, but this image gets the general impression across.

The same thing happened to the story of Gunther and the fall of the Burgundian kingdom. Hunnish mercenaries had brought Gunther down, and that guaranteed that the storytellers would weave Attila the Hun himself into the plot. They had an excellent hook for that part of the legend.

After a long life of conquest and plunder, the old Hunnish king married a young, golden-haired barbarian woman whose name, according to the Roman chronicles, was Ildico. By dawn after the wedding night he was dead. Inevitably, rumors spread that she had killed him (conspiracy theories have been around since time immemorial). Over time that claim merged with the Huns' role in Gunther's downfall to produce a legend: Ildico had supposedly been Gunther's sister, and she killed Attila to avenge her brother.

This version of the story was probably in circulation by the end of the fifth century. Ildico's name dropped out of the tradition, and Gunther's supposed sister was given at least two other names, Grimhild and Gudrun. Gunther also acquired a brother or cousin, Hagen, who had an evil reputation. And he acquired a treasure.

Some writers have suggested that he may really have had one. The barbarian kings of that era had access to rich plunder, and as the Roman world waned, precious metals accumulated over centuries could easily end up in the hands of a lucky adventurer. Be that as it may, Gunther's treasure became a central motif of his story. Legends spread that Attila killed Gunther to get his hands on the treasure, but Gunther had hidden it so well that it was never found. Later storytellers added that Gunther hid it forever by throwing it into the Rhine.

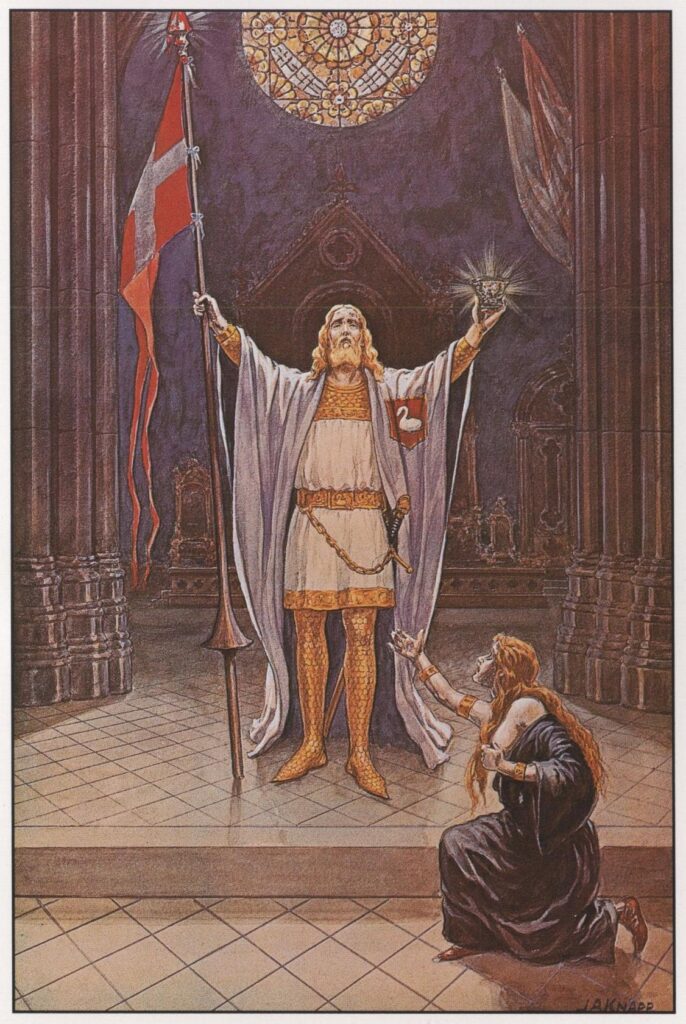

Brunhilda was something like this, only with oceans of blood splashed across the scenery.

Another century passed before Brunhild, the next character in the story, appeared in the narrative. Her real name was Brunhilda, and she was a Goth. (No, that doesn't mean she wore black clothes and heavy black eyeliner.) She was the daughter of the Visigothic king Athanagild and was born around 543 in Toledo, the Visigothic capital after their conquest of Spain. When she came of age she married Sigibert I, king of Austrasia, one of the four kingdoms the Franks carved out of the former Roman province of Gaul.

Her life was long, tangled and bloody. In brief: her sister was married to the king of neighboring Neustria, and the Neustrian king murdered her at the urging of his mistress Fredegund. Brunhilda answered with a campaign of epic vengeance.

Her bloody feud with Fredegund ran on for the next several decades. Brunhilda survived the murder of her husband and the deaths of many others, and paid her rival back in full. She ruled Austrasia three times, each time as regent for a different underage prince, and each time she was overthrown.

An impressive number of enemies were killed on her orders. According to chronicles from her own time, her victims included ten Frankish kings alone, along with many people of humbler birth. When she was finally defeated for good, her conquerors did one of two things, depending on which chronicle you believe. Either they had her torn apart by wild horses, or they dragged her behind a horse along a rocky mountain road until she died.

Her iron will, turbulent life and violent death guaranteed that she would become a legendary figure. The tales about her inevitably drifted away from the historical events and, over the years, merged with the legend of Gunther's kingdom and its fall. In the process, the harsh realities of Dark Age Austrasian politics sank into oblivion. Only a few traces remained: the heroine's name, her extraordinary strength of will, her passion for revenge, and the fact that her death involved a horse.

Then there was Siegfried. His roots reach much, much deeper than the age of barbarian warlords that produced Gunther and Brunhild, and he most likely never existed as a historical person at all.

Scattered through the myths and legends of the ancient Indo-European diaspora, from India to Ireland, are fragments of an ancient story. A radiant hero-god battles a greedy god of the underworld to free a magical treasure linked to the fertility of the earth. In the earliest times, when the Indo-Europeans lived roughly where Ukraine is today, this was probably a simple seasonal myth. The hero is the golden sun, born in the darkness of midwinter, who grows to maturity and slays the spirit of winter.

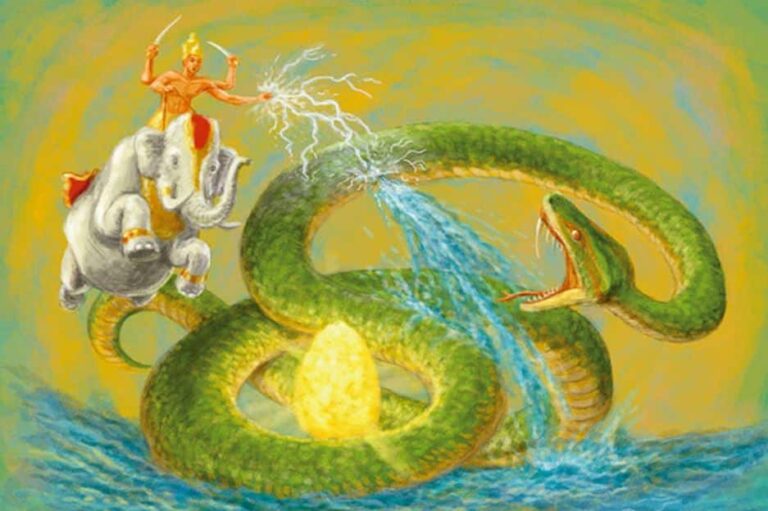

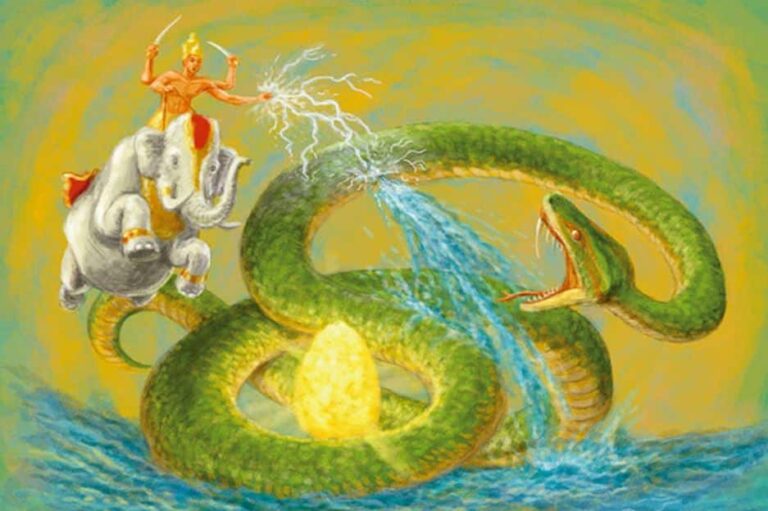

Indra strikes down Vritra and frees the waters.

In India, where the threat to fertility was drought rather than cold, the myth became a battle between the warrior god Indra and the dark serpent Vritra, who holds back the waters. In Iran, Verethragna, whose name corresponds to Indra's title "slayer of Vritra," played the same heroic role in pre-Zoroastrian times. In Slavic lore the adversary took shape as Veles, a god of fertility. In the oldest layers of Roman mythology he became Vejovis, the underworld Jupiter and lord of cattle and the earth's wealth, against whom Jupiter the thunderer wages a seasonal war.

In the cold north, however, the winter spirit kept Vritra's terrible serpent form, and the role of dragon-slayer gradually passed to the two greatest heroes of northern legend, Beowulf and Siegfried. Elsewhere the solar hero rose after his victory to glory and success, but the northern tales took on a grim cast. Here the treasure became a curse.

Beowulf kills his dragon with the help of brave young Wiglaf, but he dies of his wounds. Without his strong arm to protect his people, the dragon's hoard draws so many raids from distant lands that the mighty kingdom of the Geats perishes forever. As for Siegfried, we'll get to him. For now, let's just say that he meets a wretched death not long after his dragon-slaying feat, and the treasure he won is lost forever.

Remember how everyone in Middle-earth rushed to the Lonely Mountain after Smaug died? Tolkien knew what he was writing about.

Perhaps this simply reflects what happens to hoards of precious metal in violent times. The rich make many mistakes as civilizations decline, and one of the most common is trying to preserve their wealth by turning it into precious objects and hiding it. That only guarantees that every local warlord, barbarian war band or rebel group learns how easy gold and jewelry are to take. All they have to do is seize the rich man and his family and torture them, starting with the children, until he reveals where the treasure is hidden. (No doubt today's precious-metals enthusiasts will eventually learn this through bitter experience.)

In such times the meaning of the cursed hoard is clear enough: it teaches a harsh practical lesson. As we'll see, though, that lesson took on new meanings over the centuries.

So Siegfried the dragon-slayer broke free of the world of seasonal myth and drifted into the legends. There he met Gunther, the doomed king, with his sister Gutrune, his grim brother Hagen, and his shining treasure, an echo of the gold of the summer harvest once freed by the solar hero. Siegfried seems to have arrived at about the same time as Brunhild, the magnificently terrible avenging queen. The minstrels promptly decided the two were made for each other.

The medieval romance version.

By around the year 1000 the story had probably taken its classic form. The original version is long lost, but two substantial works based on it have come down to us: the "Nibelungenlied" in Germany and the "Völsunga saga" in Iceland. The two versions are far from identical. The "Nibelungenlied" is a polished chivalric romance that would fit comfortably alongside the legends of King Arthur. The "Völsunga saga" is a grim and brutal tale full of almost nonstop slaughter.

Their plots also differ considerably, though some core themes are shared by both.

There is Siegfried (Sigurd in the Icelandic version), the dragon-slaying hero, born to a widow after the death of his father Siegmund. Siegfried is golden-haired, strong, handsome and fearless. His body cannot be wounded, except at the inevitable vulnerable spot on his back. There is the dragon he slays, and the vast golden treasure he takes from the monster's lair. There is Brunhild (Brynhild in the Icelandic version), a warrior woman of superhuman strength. There is Gunther (Gunnar in the saga), and his sister Kriemhild (Gudrun in the saga). There is Hagen (or Högni), waiting with his spear. And finally, somewhere in the distance looms Attila the Hun, who is Etzel in the "Nibelungenlied" and Atli in the "Völsunga saga."

At the heart of both versions is a simple story. Siegfried, fresh from slaying the dragon, arrives at Gunther's court and falls in love with Gutrune (the name Wagner will give the sister). Gunther wants to marry Brunhild, but she will marry only a hero, and Gunther doesn't measure up.

Gunther promises to let Siegfried marry Gutrune if Siegfried helps him win Brunhild. Siegfried agrees, uses a magic cap from the dragon's hoard to take on Gunther's appearance, and performs the required feats. Brunhild marries Gunther, Siegfried marries Gutrune, and all seems well.

The far bloodier Norse version. (Yes, translated by that William Morris. He had enough talent for two dozen less gifted people.)

Brunhild and Gutrune quarrel, just as Brunhilda and Fredegund did. It comes out that Siegfried, not Gunther, overcame the warrior woman. Brunhild, seething with rage, conspires with Hagen. He takes Siegfried hunting and drives a spear into the vulnerable spot on his back, killing him. Brunhild kills herself and burns with Siegfried on his funeral pyre, and Gunther and Hagen take Siegfried's treasure.

Gutrune then marries Attila the Hun and ruthlessly uses him as an instrument of vengeance against her brother and Hagen, dangling the hope of the dragon's treasure before him. Hagen, however, sees through her plans. He throws the golden treasure that Siegfried won from the dragon into the Rhine, where it is lost forever. Then, well, to put it briefly, everybody dies.

Apart from that last detail, this looks nothing like the story of Gundahar, the barbarian warlord, and his short-lived Burgundian kingdom. It's interesting, though, that all the great epics born in Europe's Dark Ages end this way.

The Arthurian cycle, the most famous of them, is the chronicle of a colossal failure: a golden kingdom brought down by the personal flaws of those who should have protected it. The French "Song of Roland" and the Welsh "Y Gododdin" tell of bitter defeats brought on by the overconfident pride of the losing side's leaders, and in both cases those leaders are the heroes of the story. The great cycle of Danish warrior deeds survives only in the fragment we call "Beowulf," and it too tells of the golden days of a great kingdom and its bloody end. Forgotten bards wove a story from the fates of Gunther and Brunhild and the ancient myth of Siegfried the solar hero, and it is cut from the same cloth.

In European legend the ending was always foreordained. The details? We'll get back to them.

Dark ages produce epics, but not all of them end in catastrophe. Consider the "Odyssey." It was composed in the grim times after the collapse of the Late Bronze Age in the eastern Mediterranean, yet it ends with Odysseus returning home in triumph.

Even so, the cold whisper of coming doom seems to have hung over the European project from its very first steps in the post-Roman era. The "Nibelungenlied," the "Völsunga saga" and the other monuments of old Germanic literature burst out of centuries of obscurity and broke like thunder over the heads of nineteenth-century Europe, and they brought exactly that feeling with them. In his original plan for "The Ring of the Nibelung," Richard Wagner tried to soften this by prophesying the doom of only those aspects of industrial society he personally hated. As we'll see, however, the legends were destined to have the last bitter laugh.

Original article: The Nibelung’s Ring: The Legends

27 Jan 2025

(Theme music: the Prelude to Wagner's "Das Rheingold")

Some years ago, back when I was writing The Archdruid Report blog, I mentioned in passing that if I ever got tired of having a large audience, I'd launch a series of posts on Richard Wagner's tetralogy "The Ring of the Nibelung." That was partly a joke, but only partly. For a variety of reasons I've decided to follow through on it now, regardless of what it does to the traffic on this site.

In many ways we live in Wagnerian times. Wagner's art and life were defined by a mix of genuine creative genius and pompous, self-absorbed bombast, and that same mix characterizes Western industrial society just as much, though most of its inhabitants prefer not to think about it. The "Ring" is pervaded by a sense of lost greatness and impending doom, and that sense sounds quite distinctly today in the background music of our age.

From P. Craig Russell's graphic adaptation. How many operas do you know of that have been turned into graphic novels?

For those who don't know, "The Ring of the Nibelung" (the Ring for short) is a cycle of four interconnected operas written by the German composer and librettist Richard Wagner (1813–1883). The four operas are "Das Rheingold" (The Rhine Gold), "Die Walküre" (The Valkyrie), "Siegfried," and "Götterdämmerung" (Twilight of the Gods).

Together they make up the most colossal creation of the Western tradition. It is a symphonic composition for full orchestra that takes more than fourteen and a half hours to perform. It comes with a dramatic poem of comparable scale and all the theatrical detail needed to stage the whole tetralogy as an operatic drama over four evenings.

And that is only the most obvious measure of the Ring's scale as a cultural phenomenon. As some readers already know and others will have guessed from the titles, Wagner's drama is based on Germanic mythology and legend. He drew his plot from two versions of the same ancient story: the "Nibelungenlied" from medieval Germany and the "Völsunga saga" from medieval Iceland. Both go back to the bloody events of the last years of the Roman Empire.

A modern production of "Orfeo." It's aging gracefully.

There was nothing unusual about opera of that era borrowing from ancient legend. Claudio Monteverdi's "Orfeo" (premiered in 1607), the oldest opera still regularly performed, is based on Greek mythology, and many composers before Wagner followed in Monteverdi's footsteps. The usual approach, however, was to find an elegant story, put it into verse, add music, and call it done.

Wagner did things differently. He wrote all his own libretti (the libretto is the text of an opera). He prepared to write the Ring by immersing himself in the world of old Germanic mythology: he read the original texts obsessively and devoured every scrap of scholarship on the subject he could beg, borrow or steal. One result is that anyone familiar with the Eddas who attends a performance of the Ring will notice a great many references to Old Norse literature.

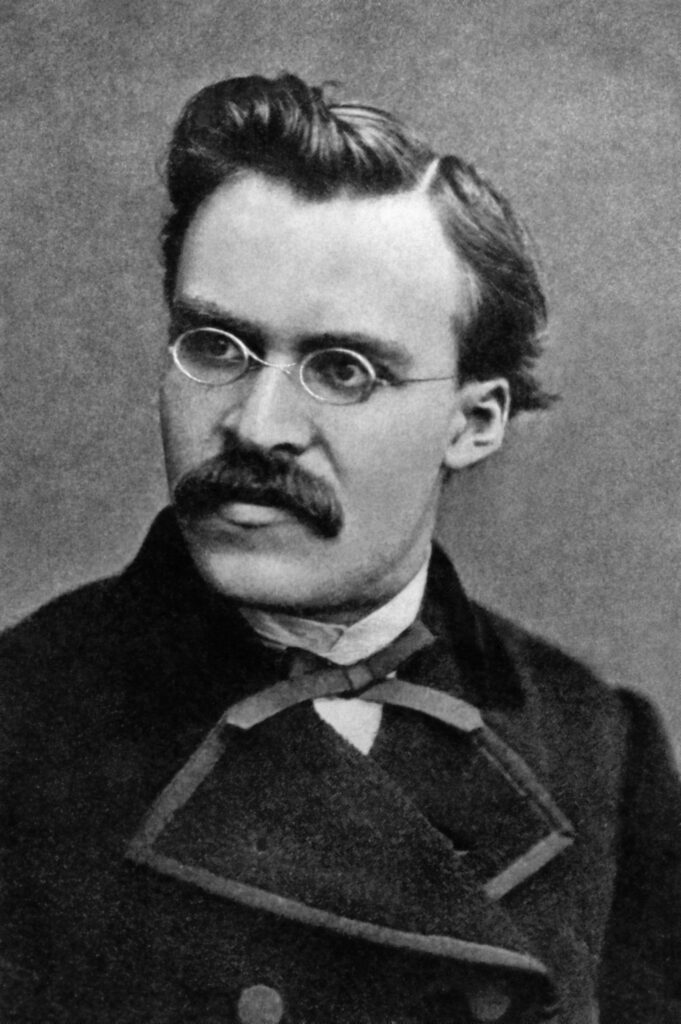

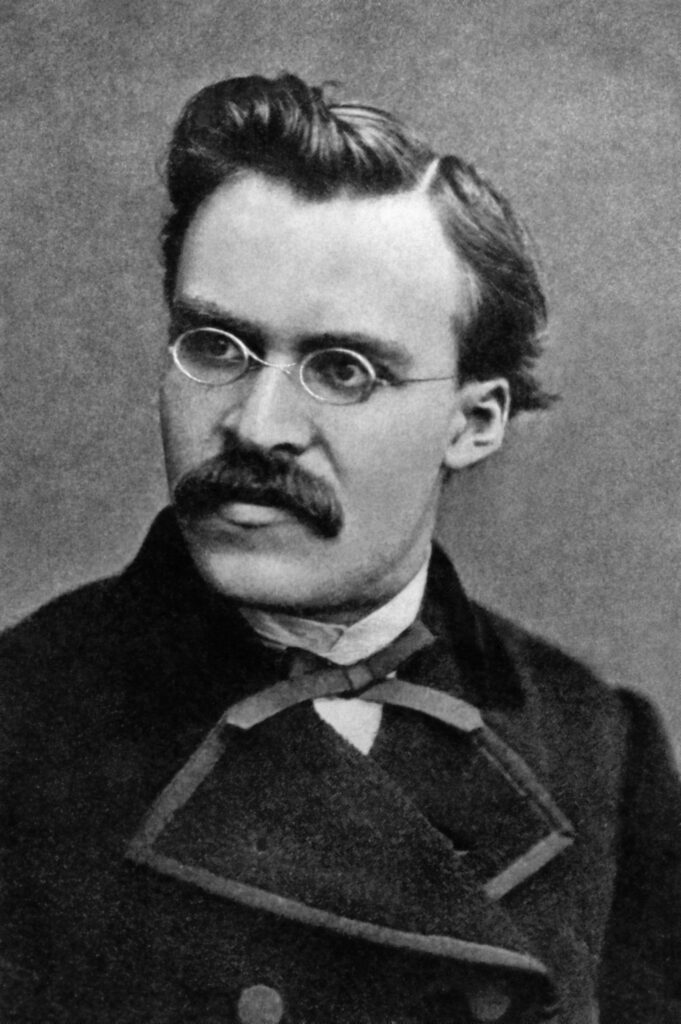

Friedrich Nietzsche. Wagner influenced him profoundly.

That wasn't the only ingredient in the recipe for the Ring, however. Wagner… we'll discuss his repellent side shortly, okay? First I want to talk about why the Ring deserves attention… So, as I was saying, Wagner is unique among the great composers in that the main influences on him were not musicians. The two chief inspirations for these four operas were philosophers.

Many people know that Wagner and Friedrich Nietzsche were close friends, and that's true. But Nietzsche didn't influence Wagner; Wagner influenced Nietzsche. Even after their break, Nietzsche repeatedly admitted in his letters that the years of his friendship with Wagner had been one of the most important intellectual experiences of his life. That verdict, from one of the most influential philosophers of the modern era, says a great deal.

Wagner's main philosophical inspirations were two other men. The first was Ludwig Feuerbach. Almost nobody remembers him today, but his ideas have soaked into our entire culture. He was the guiding light of the young Wagner, who at that point was still writing the libretti and beginning to sketch out musical ideas.

You know Feuerbach's thought even if you've never read a line of his work. Remember the idea from the 1960s that if young people threw off the burden of a rotten society and embraced free love and peace, a new golden age would dawn? Feuerbach invented that.

Ludwig Feuerbach. Put love beads and a tie-dyed shirt on him and he'd fit right in on a Haight-Ashbury street corner in 1966.

Generations younger than mine will most likely just laugh if you play them "Aquarius" from the hippie musical "Hair," one of the most rapturous modern expressions of that vision. I still remember how many people sincerely believed in ideas like that. In 1841, when Feuerbach first introduced the idea, it hadn't yet been disproved by repeated catastrophes, so it seemed even more convincing. It made a deep impression on many young radicals, Wagner among them.

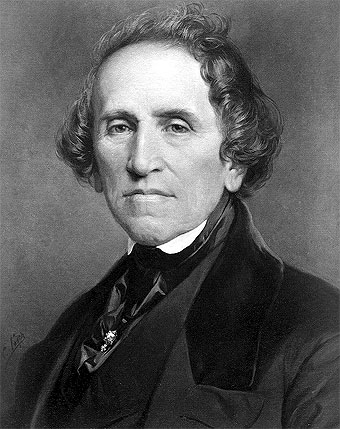

For the mature Wagner, by the time he was working on the music for the Ring, the guiding star was the very different thought of another philosopher, Arthur Schopenhauer. The tension between these two philosophers runs through all four operas, and it matters for more than musical reasons. Feuerbach was, among other things, a political philosopher and an optimist who believed wholeheartedly in a glorious world to come. Schopenhauer was a pessimist who rejected the very idea that political change could change human nature.

Contrary to the cliché, Wagner spent his whole life on the far left of politics (yes, we'll get to that too). The decades he spent on the Ring coincided with a period when his own political convictions were being harshly tested by events. What's remarkable is how he wove into his music both that contradiction and his own deep ambivalence about how it might be resolved.

Arthur Schopenhauer. Among other things, he was the only major Western philosopher to take Asian philosophy seriously.

As a result, in the Ring Wagner set out to tell the entire history of Western civilization, from dawn to dusk, in symbolic form. Each opera plays its part in this grand project:

- "Das Rheingold" lays out the basic problem, using the gods, dwarves and giants of Germanic mythology as allegories for social classes and philosophical principles.
- "Die Walküre" and the first two-thirds of "Siegfried" summarize all of Western history up to Wagner's time.
- The last act of "Siegfried" and the beginning of "Götterdämmerung" depict Wagner's own era.
- The rest of the final opera is a prophecy of the future.

Does this sound like one of those far-fetched readings critics love to impose on works of art? You may well find this kind of reasoning overwrought and pretentious, but it isn't a critic's invention. Wagner himself wrote whole volumes about the meaning of the Ring. He set out the entire history of how the Ring was conceived in three full-length books and a great many letters. Being Wagner, he wrote constantly, and his letters were always about himself, his work, his ideas, and… well, this brings us to the other side of Richard Wagner.

Richard Wagner, equally exceptional as a genius and as a scoundrel.

The thing is, for all his genius, he was a wretched excuse for a human being. He seems to have been born to prove once and for all that a person can be both the greatest of creative geniuses and the greatest of scoundrels. He had a formidable intellect that kept up with the latest trends in folklore studies, philosophy, political economy and music. Yet he never once bothered to apply the smallest part of it to his own destructive habits, his contemptuous prejudices, or his monstrously cruel treatment of everyone around him.

This was a man who loved luxury he couldn't afford, until he found an eccentric king who handed him money without asking questions. Before that, Wagner borrowed from everyone, his closest friends included, and then flew into a rage if they asked for their money back. He did this so often and so persistently that for years he had to live abroad to stay out of reach of the police. The rest of his conduct was of a piece with this. If you were Wagner's friend, you had to accept that everything revolved around him. Your only role was that of an adoring worshipper at the altar of Richard Wagner.

Georg Wilhelm Friedrich Hegel, contemplating his own divinity.

Granted, this sort of thing was even more common among celebrities then than it is now. Nineteenth-century European intellectuals routinely saw themselves and their ideas as the turning point of all history. Wagner, in fact, fell well short of two examples:

- Charles Fourier, the inventor of socialism, believed the oceans would turn to lemonade once everyone adopted his philosophy.
- G.W.F. Hegel claimed that in him the Absolute, the divine essence of all things, had become self-aware for the first time.

Wagner never reached that cosmic level of effrontery. Even so, his ego was so enormous that Donald Trump would look modest next to him.

And that is exactly why we know precisely what Wagner meant by the Ring. He was so convinced of the world-historical importance of his every thought that he documented literally everything in letters, essays and books, so that not one golden word would be lost to posterity. It's a paradise for biographers and for critics who study context. You don't have to guess what Wagner thought about anything; he told you himself.

His wife labored over his public image, but he had no filter at all, to her horror and to his friends'. When a thought occurred to him he said it, usually in print and as publicly as possible. If people took offense, well, to Wagner that only proved they were wrong.

Giacomo Meyerbeer, a much nicer man than Wagner. His operas are rarely staged anymore; they were popular, but not as good.

What gave his more-than-gyroscopic self-centeredness a bitter aftertaste was resentment. His talent was so obvious to him, and his music so important, that he quickly reached a conclusion. The only reason people didn't value it as highly as he did, he decided, was a conspiracy working day and night to belittle him. Did I mention he was paranoid? He was paranoid. So he was constantly eyeing the people around him nervously, trying to catch them letting slip something that would reveal their part in the sinister plot against him.

He was also, incidentally, a rabid antisemite and an equally rabid Francophobe. Both prejudices were widespread in the Germany of his time, but Wagner took them personally, for reasons that follow from the points already made. As it happened, when he was a young composer struggling to make his way, the most influential opera composer in the world was Giacomo Meyerbeer, who was Jewish. In addition, Jews still played an outsized role in moneylending across much of Europe at that time.

Put the two together and you get Wagner. He was convinced of his overwhelming superiority to Meyerbeer, so Meyerbeer's wealth and fame could only be the result of a conspiracy. The moneylenders who financed his extravagant lifestyle expected to be repaid, and he explained that as part of the same conspiracy. Slavering tirades about "Jewish plots" followed as night follows day.

His hatred of the French had similar roots. As a young man he went to Paris convinced that everyone would instantly recognize how far his operas surpassed everyone else's. I think you can imagine what Parisian composers, journalists and opera critics thought of that. He never forgave them for not falling at his feet.

None of this can be ignored when talking about Wagner, and all of it has been explored in detail in dozens of biographies. The same is true of the next issue we have to address: some years after the composer's death, Wagner acquired a certain pasty-faced Austrian fanboy. Yes, this is where Adolf Hitler takes the stage.

Never judge an artist by the behavior of his fans.

Hitler was obsessed with Wagner from childhood. According to August Kubizek, one of his few friends as a young man, Hitler suddenly announced he would one day go into politics right after seeing Wagner's early opera "Rienzi."

To make matters worse, Hitler went from failed artist to soldier, political activist and finally Reich Chancellor, and along the way Winifred Wagner fell madly in love with him. She was the English-born widow of Wagner's son Siegfried, and she desperately wanted to marry the Führer. He never proposed (that's a murky story of its own). Even so, throughout the twelve years of the Reich Hitler could count on a warm welcome and fawning servility from the Wagner family and the Bayreuth Festival.

As a result, many people automatically assume that Wagner was a Nazi, a "proto-Nazi," or at the very least tainted by the Nazis' love of his operas. Au contraire: most Nazis had no time for opera, and Hitler's futile attempts to make his subordinates attend Wagner became a running joke in the Nazi Party.

As for Wagner himself, he stood at the opposite end of the political spectrum. By the standards of his time, he was a fiery left-wing radical in his youth. In his mature years, taught by bitter experience, he kept his leftist ideals but lost faith that they could be achieved through the political process.

Mikhail Bakunin, Wagner's buddy in the counterculture of the 1840s.

Wagner's ultra-left radicalism has been so thoroughly obscured by ignorant commentators that it deserves special emphasis. Most people who know anything about anarchism have heard of Mikhail Bakunin; for everyone else, he was the most influential anarchist theorist and activist of the nineteenth century. He and Richard Wagner were close friends and fought side by side on the barricades during the failed European revolutions of 1848–1849. Wagner's role in one of those uprisings was significant enough that, once it was crushed, he had to flee across the border into Switzerland with a price on his head.

Part of the reason Wagner's politics are so hard for people today to understand is that his ideas were shaped by the forgotten world of pre-Marxist socialism. Marxists carefully keep quiet about that period, since it offers alternatives to the grim totalitarian state that emerges whenever Marxism is put into practice. Supporters of political and economic systems to the right of Marxism have their own reasons to keep those alternatives forgotten: it lets them point to Marxism's monstrous failures as a weapon against their opponents on the left. Convenient for both sides.

That doesn't mean pre-Marxist socialism works any better than the Marxist kind. As Wagner learned after 1848–1849, and as many of us learned after the 1960s, the ideas of Feuerbach and the movement's other thinkers proved unworkable in the real world. But these are two different kinds of unworkability. Pre-Marxist socialism failed because it simply didn't work in practice; Marxist socialism failed because in practice it turned into a gray bureaucratic tyranny that held on to power through prison camps and mass shootings. Both failures were predictable, and we'll come back to that, not least because in the "Ring" Wagner identified the central weakness of the radicalism he knew.

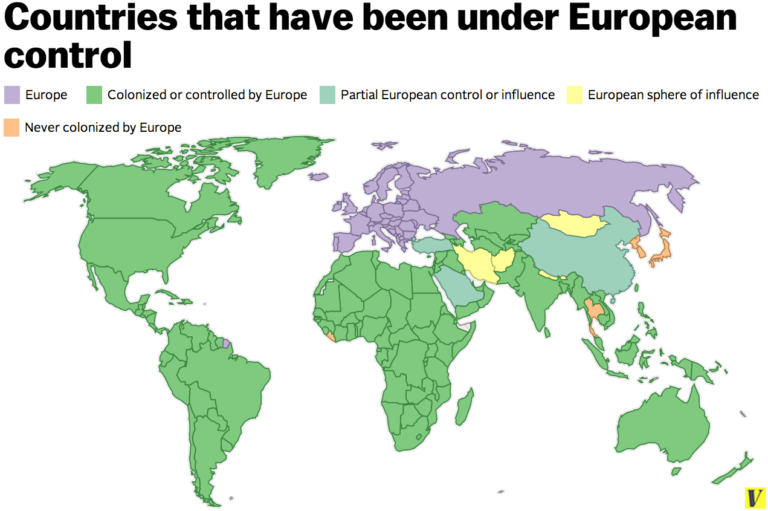

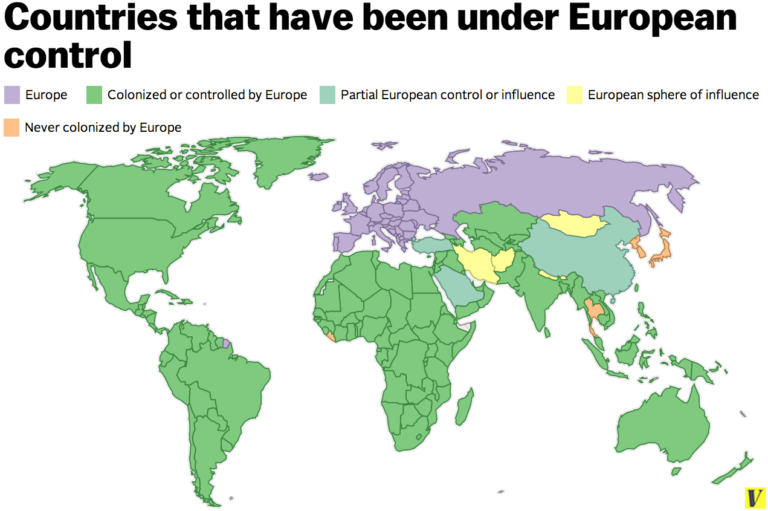

Countries that have been under European control at one time or another. And no, the process was not a peaceful one.

But the "Ring" is not only about political economy, and Wagner is not merely a colossal jerk who happened to be a brilliant creator. In a very real sense, Richard Wagner is a triple-distilled, charcoal-filtered concentrate of the Europe of his era: the zenith of Europe's global empire, the largest in the planet's history and one of the most brutal and predatory. In his restless creative genius, his astonishing insight into everything except himself, his fantastic arrogance, and his coarse cruelty toward the people around him, he became the perfect symbol of the entire European project. "The Ring of the Nibelung," in turn, is a sustained attempt, the most significant in the history of Western art, to grasp the trajectory of the global European project as a whole and project it into the future that was already taking shape in Wagner's time.

But the European project was not going to end well, and it took Wagner years to come to terms with that. Having finished his operatic rendering of the past and turned to the present and the future, he nearly abandoned the whole undertaking. He had originally meant to end the "Ring" with the grand vision of a future of beings free in spirit and in love, a vision he drew from Feuerbach, Bakunin, and the seething cauldron of the European counterculture that produced the failed revolutions of 1848–1849, and the dream that had guided the first half of his life. It took years, and many bouts of clinical depression during which he repeatedly contemplated suicide, before his own intuition finally convinced him that such a future was impossible.

"Parsifal" in Manly P. Hall's "The Secret Teachings of All Ages." You won't find any of the other operas there.

As for how he envisioned a possible future, we'll get to that. The question is complicated by the fact that Wagner also wrote a fifth opera, "Parsifal," his last and arguably his finest work. In it he took up the themes of the "Ring," recast them in new forms, and brought the whole saga to a conclusion he could not have imagined when he first began work on the "Ring." The parallels are close enough that "Parsifal" can fairly be treated as the final installment of the cycle. But we'll deal with that in due course.

Before we get there, however, there is a great deal to discuss. In the next installment we'll talk about the legends and myths that supplied Wagner with the raw material for his operas, the historical events that gave rise to them, and the colossal influence, both beneficial and destructive, that those legends exerted when they resurfaced in Wagner's era. Stay tuned.

Original article: The Nibelung’s Ring: Prelude
